To use or not to use? That is the question that keeps fueling debates across forums, blogs, newsletters, and social feeds. Since its official release in 2022, ChatGPT has made an impressive amount of noise in the digital space. It was a genuinely innovative step forward. And at the same time, a trigger for existential panic mixed with big, almost utopian hopes about the future.

Some people were instantly thrilled by the promise of lighter workloads and faster research. Others reacted with far less enthusiasm. Accusations of copyright theft surfaced almost immediately. Headlines warned that artificial intelligence would soon replace human labor altogether, leaving entire professions behind and people without a clear place in the system. That last scenario hasn’t happened. At least not yet.

What has happened is quieter, but arguably more telling. ChatGPT never really left the daily agenda. It keeps showing up in conversations, LinkedIn posts, workplace chats, and casual problem-solving moments – whether someone is drafting an email, summarizing a report, or just exploring a curious question. You can love it or hate it, but it’s becoming increasingly difficult to pretend it doesn’t exist.

At this point, resistance feels less like a stance and more like denial. Tools like ChatGPT are here to stay, and they will continue evolving toward a future that remains largely undefined. You can ignore the chatbot entirely, but global rankings and ChatGPT statistics tell a different story. The number of users keeps climbing. Sometimes out of convenience. Sometimes out of pure curiosity.

So instead of endlessly arguing whether it belongs in our lives, let’s ask a different question. How is it actually being used today? Who relies on it, and for what? What do ChatGPT user statistics reveal about the habits we’re forming, often without even realizing it? Let’s unravel this together, and see what the numbers actually say.

Honest and slightly unsettling facts about how ChatGPT is quietly becoming part of everyday life

Artificial intelligence no longer lives in pitch decks or futuristic think pieces. It sits next to us. Helps draft emails. Explains medical symptoms. Fixes code. And sometimes sounds very confident while being very wrong. The numbers below show just how deeply ChatGPT has already seeped into daily routines, and why that’s both impressive and a little uncomfortable.

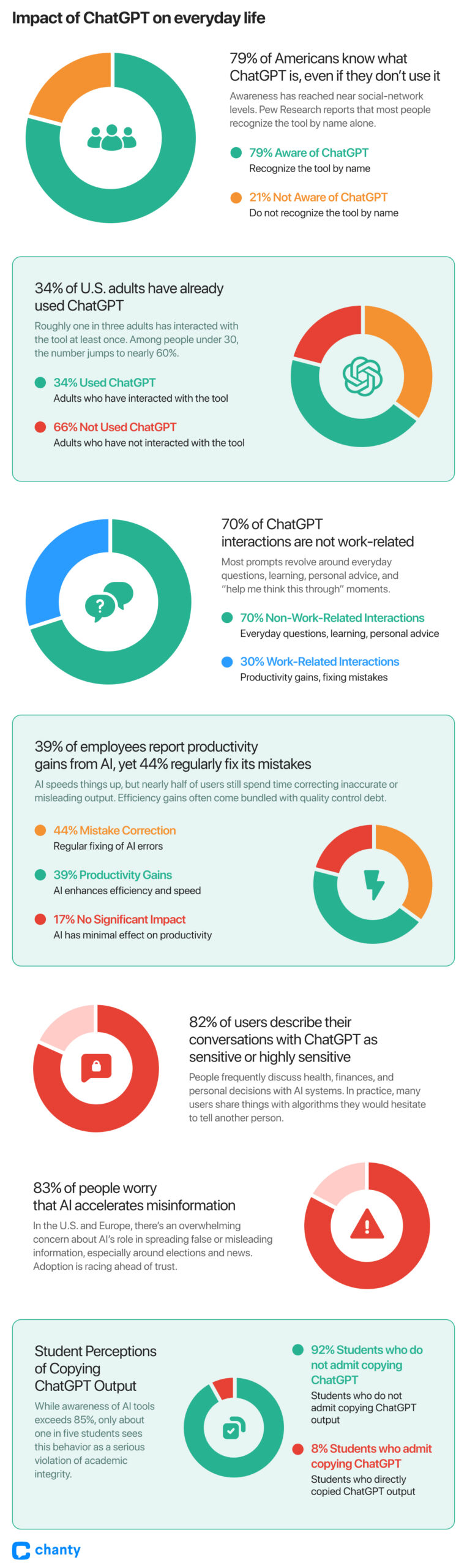

- 34% of U.S. adults have already used ChatGPT

Roughly one in three adults has interacted with the tool at least once. Among people under 30, the number jumps to nearly 60%, according to recent Pew Research Center data. This is no longer early adoption. It’s mainstream behavior.

- 79% of Americans know what ChatGPT is, even if they don’t use it

Awareness has reached near social-network levels. Pew Research reports that most people recognize the tool by name alone. Even if you’re not actively using AI, it’s already shaping the conversations around you.

- 70% of ChatGPT interactions are not work-related

Most prompts revolve around everyday questions, learning, personal advice, and “help me think this through” moments, based on aggregated usage analyses from 2024 – 2025. ChatGPT is evolving less like a productivity tool and more like a general-purpose cognitive companion.

- 39% of employees report productivity gains from AI, yet 44% regularly fix its mistakes

A global workplace survey from 2024 highlights the paradox. AI speeds things up, but nearly half of users still spend time correcting inaccurate or misleading output. Efficiency gains often come bundled with quality control debt.

- 83% of people worry that AI accelerates misinformation

Public opinion surveys across the U.S. and Europe show overwhelming concern about AI’s role in spreading false or misleading information, especially around elections and news. Adoption is racing ahead of trust.

- 82% of users describe their conversations with ChatGPT as sensitive or highly sensitive

Academic research published on arXiv in 2025 found that people frequently discuss health, finances, and personal decisions with AI systems. In practice, many users share things with algorithms they would hesitate to tell another person.

- 8% of students admit to directly copying ChatGPT output into academic work

Higher-education studies published by Springer in 2024 – 2025 reveal a growing gray zone. While awareness of AI tools exceeds 85%, only about one in five students sees this behavior as a serious violation of academic integrity.

The quiet arrival of a technological giant

Let me be honest: I think I completely missed the launch of ChatGPT. I must have encountered it somewhere in the background, perhaps as a passing headline, a fleeting tweet, or a casual mention in conversation. But I did not truly notice it until it had already spread everywhere and quietly embedded itself into daily life.

There was no iconic villain’s arrival theme, no suspenseful buildup, no fanfare, and not even the courtesy of a dramatic drum roll; instead, it seemed to appear almost imperceptibly. One day it existed, and the next it was woven into everyday conversations, professional workflows, and global headlines, as though it had always been there, waiting for collective attention to finally catch up.

ChatGPT, developed by OpenAI, officially entered the public scene in November 2022. Its rise has already earned a place as one of the most remarkable adoption stories in the history of the technology industry. What began as a research preview reached one million users in just five days, and then continued to grow at a pace that few consumer technologies have ever matched.

From research preview to global platform

Within months, ChatGPT evolved from an experimental interface into a global platform used by tens, and later hundreds, of millions of people, fundamentally changing the way the public interacts with artificial intelligence and rapidly accelerating awareness of generative AI tools worldwide.

The scale of this growth becomes clearer when viewed through usage data:

- November 2022: approximately 152.7 million visits in its first month

- April 2023: around 1.8 billion monthly visits

- Late 2024: roughly 300 million weekly active users

- 2025: close to 800 million weekly users, processing over 2.5 billion prompts per day and attracting billions of monthly visits

These numbers describe more than popularity; they mark a behavioral shift, in which a conversational interface became a primary way people access information, solve problems, and explore ideas.

A new front door to information

Since its appearance, ChatGPT has effectively become a new front door to information, with a “Welcome” mat placed beneath it. It became a phenomenon because, unlike most state-of-the-art language models of the time, it was public, free, and simple: just a chat window in an ordinary web browser, waiting for your question and ready to deliver an instant answer.

Early interactions were often simple and exploratory. For some reason, the early versions of ChatGPT and the way people used them remind me of a Magic 8 Ball, except this one responded in full sentences, maintained context, and occasionally delivered answers that felt unexpectedly capable.

Why ChatGPT adoption happened so fast

This adoption was not limited to casual experimentation. ChatGPT quickly embedded itself into everyday workflows and creative processes, moving beyond novelty and into digital infrastructure relied upon for coding, research, education, writing, planning, and internal business operations.

Why did this happen so quickly?

Part of the answer lies in timing. The world was just beginning to crawl out of a global pandemic, and people had grown accustomed to digital tools and remote problem-solving. Then a new kind of interface appeared, one that felt less like software and more like a supportive conversational partner. It could help draft school assignments, organize work tasks, explain unfamiliar topics, or generate mostly functional code, all while reducing friction.

Why search through dozens of links and fragmented sources when a single, well-phrased question in a small chat window could return a structured, contextual answer instantly?

Who uses ChatGPT, and why it stuck

As the earlier growth data suggests, ChatGPT became a rising force among large language models not simply because it impressed the technology sector, but because it crossed a more difficult boundary. It proved itself useful to ordinary people.

What began as a curiosity for engineers and early adopters did not remain confined to that circle for long. Today, ChatGPT is used by a wide spectrum of people, from students preparing for exams to professionals in fields such as life sciences, healthcare, education, software development, and research. Observed closely, this adoption often appears unceremonious. The tool enters daily routines quietly, solving small problems, supporting thought, and becoming embedded without requiring a formal decision to adopt it.

By mid-2025, usage patterns had stabilized into a clear split. Roughly 70–73 % of all ChatGPT conversations were personal or non-work related, while 27–30 % supported work, study, and productivity tasks. This balance explains much of ChatGPT’s present position. It is neither a narrowly professional instrument nor a passing novelty. It occupies the space between everyday life and sustained cognitive work, absorbing both.

Demographic and usage data reinforce this position.

Across regions, ages, and contexts, the user base continues to diversify:

- Age. Nearly half of all interactions come from users under 26, with adoption declining gradually rather than abruptly with age

- Gender. Early adoption skewed male, but usage is now roughly balanced between users with typically feminine and masculine names

- Geography. Adoption is growing fastest in low- and middle-income countries, at rates more than four times higher than in high-income regions

- Usage context. Personal use dominates overall volume, while professional, educational, and creative use remain substantial

Taken together, these patterns indicate broad normalization rather than niche appeal.

Age and habit formation

Younger users remain the strongest adoption group. Semrush Trends data shows that 46.7 % of ChatGPT users are aged 18–24 (compared with 24.7 % of Google users in the same bracket), and another 24.3 % are aged 25–34. In practical terms, roughly seven out of ten ChatGPT users are under 35.
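The “seven out of ten” figure is simply the sum of the two youngest brackets, and the Google comparison is a plain ratio. A minimal Python sketch of that arithmetic, using only the Semrush percentages quoted above (the variable names are illustrative):

```python
# Semrush Trends age shares quoted above (percent of each platform's users)
chatgpt_18_24 = 46.7
chatgpt_25_34 = 24.3
google_18_24 = 24.7

# Share of ChatGPT users under 35: the two youngest brackets combined
chatgpt_under_35 = chatgpt_18_24 + chatgpt_25_34

# How much more concentrated ChatGPT's audience is in the 18-24 bracket than Google's
youth_ratio = chatgpt_18_24 / google_18_24

print(f"ChatGPT users under 35: {chatgpt_under_35:.1f} %")
print(f"18-24 share vs. Google: {youth_ratio:.1f}x")
```

Roughly 71 % of users fall under 35, and the 18–24 bracket is close to twice as prominent among ChatGPT users as among Google users.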

This trend is less about age itself than about habit formation. Younger users are more willing to replace traditional search with conversation, to pursue follow-up questions, and to treat an interface as a thinking partner rather than a lookup mechanism. Adoption among older groups is slower, but the overall direction remains consistent.

Gender. Normalization rather than stagnation

While some datasets still show a mild male skew, the trajectory is more important than the snapshot. Longitudinal research from OpenAI’s Economic Research team, including work with Harvard economist David Deming, shows that among users whose names could be classified by gender, the share with typically feminine names rose from 37 % in early 2024 to over 52 % by mid-2025.

This reflects a familiar consumer technology pattern, compressed in time. What initially appeared as early adopter imbalance now resembles mainstream equilibrium.

Global expansion beyond established tech hubs

Geographic growth represents a quieter but more consequential shift. According to the latest OpenAI study, ChatGPT adoption is increasing fastest in low- and middle-income countries, significantly outpacing growth in wealthier regions. This suggests that conversational AI is spreading not only where digital ecosystems are already mature, but where access to expertise, education, and structured information has traditionally been limited.

Such momentum is difficult to sustain through novelty alone.

Personal and everyday use – the silent majority

For most users, ChatGPT is not primarily a work tool. The majority of interactions fall into everyday, non-work use, including:

- Information seeking, often as an alternative to traditional search

- Writing support, such as drafting, editing, summarizing, or rephrasing

- Practical guidance, including planning, how-to advice, and decision support

At this scale, usage begins to resemble habit rather than task execution. ChatGPT functions less like conventional software and more like a persistent cognitive companion.

Professional and productivity use. Smaller, but durable

Work-related use remains meaningful, even if it represents a smaller share overall. Within this segment, the most common activities include:

- Writing assistance, for emails, reports, and documentation

- Research support, including explanations, summaries, and synthesis

- Problem framing and decision support, helping users analyze, plan, or explore options

Coding occupies a notably smaller role than early narratives suggested. Only about 4.2 % of all messages involve programming or code-related help, underscoring how much real-world usage extends beyond developer-centric expectations.

Education, learning, and exploratory use

Students and lifelong learners form another stable group. Education-related interactions, such as tutoring, homework assistance, concept clarification, and study planning, account for roughly 10 % of all chats, indicating meaningful classroom and self-directed learning use.

Beyond this, a smaller but notable portion of users engage in creative and exploratory work, including brainstorming, storytelling, ideation, and conceptual problem solving. These cases may not dominate volume, but they reveal how the tool crosses boundaries between routine assistance and idea exploration.

The pattern beneath it all

Across demographics, regions, and use cases, one pattern remains consistent. ChatGPT is adopted earliest by people with flexible habits, high cognitive load, and a willingness to experiment, and it spreads outward as its usefulness becomes increasingly difficult to replace.

Does ChatGPT actually save time and reduce effort?

ChatGPT is often introduced with a simple promise: it saves you time for the things that matter more. The reality is more nuanced and more interesting.

Across studies and usage reports, people do gain measurable efficiency from using ChatGPT, sometimes in surprisingly large amounts. Yet that saved time rarely vanishes into thin air. Drafting becomes faster, but reviewing expands. Searching collapses into a single answer, but validation becomes a new habit. What looks like time saved on the surface often reappears as a different layer of cognitive work. Understanding this distinction matters, because it separates marketing claims from lived experience.

Measurable time savings in everyday work

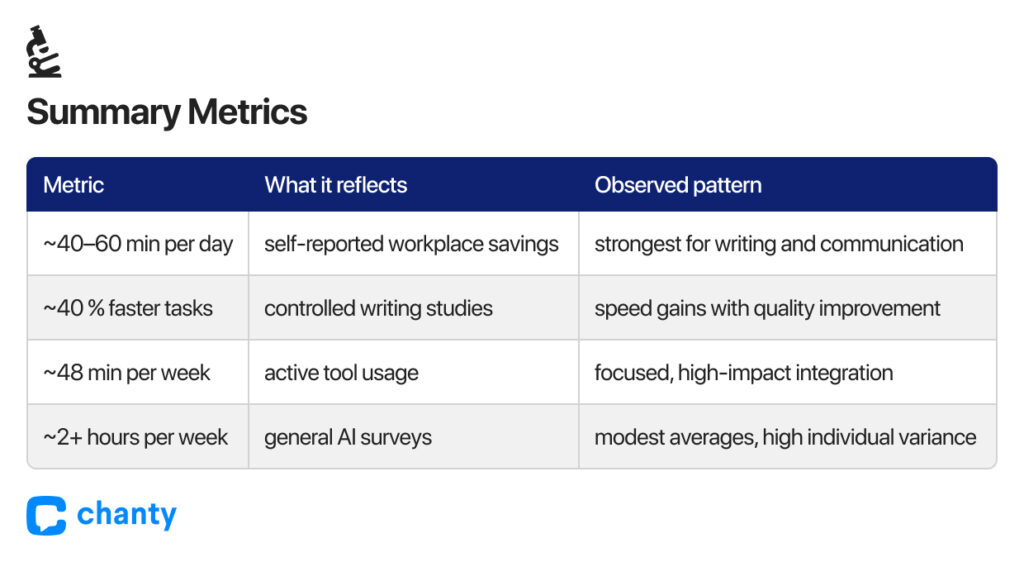

In enterprise environments, the gains are concrete. Surveys summarized by Skool and other research groups show:

- Employees using ChatGPT report saving around 40–60 minutes per workday.

- Heavy users reclaim 10 or more hours per week.

- Over 75 % say AI improves speed, quality, or both.

This is not abstract productivity. It is reclaimed time between meetings, faster email turnaround, and fewer hours staring at a blank document.
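Converting the daily range into weekly terms shows how these savings can add up. A rough Python sketch, assuming a five-day workweek (our assumption, not a figure from the surveys):

```python
# Reported range of time saved by employees using ChatGPT (minutes per workday)
MIN_SAVED_LOW = 40
MIN_SAVED_HIGH = 60
WORKDAYS_PER_WEEK = 5  # assumption: a standard five-day workweek


def weekly_hours_saved(minutes_per_day: float, workdays: int = WORKDAYS_PER_WEEK) -> float:
    """Convert minutes saved per workday into hours saved per week."""
    return minutes_per_day * workdays / 60


low = weekly_hours_saved(MIN_SAVED_LOW)
high = weekly_hours_saved(MIN_SAVED_HIGH)
print(f"Typical users: about {low:.1f} to {high:.1f} hours per week")
```

Under that assumption, a typical user recovers roughly 3.3 to 5 hours a week, so the heavy users who reclaim 10 or more hours are saving at least twice the top of the typical range.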

Where the gains are clearest

Research points to where these gains appear most consistently:

- A study by MIT and the University of Chicago found ChatGPT reduced writing task completion time by ~40 % while improving output quality by ~18 %.

- Gains are strongest for structured tasks such as emails, cover letters, and short reports.

For these tasks, AI acts less like a shortcut and more like a force multiplier.

Modest averages, meaningful impact

Broader studies, such as those cited by The Indian Express, suggest the average user saves a little over two hours per week. While modest on paper, the impact is highly context-specific:

- High-friction moments in daily writing, planning, or summarizing benefit most.

- Time savings cluster around repetitive or structured tasks rather than the whole workday.

OpenAI usage data reinforces this. ChatGPT accounts for roughly 10 minutes of active use per workday, or ~48 minutes per week. The tool is used surgically for drafting, summarizing, reframing, or unblocking thought, rather than replacing entire job functions.

What the numbers really mean

- Faster completion often improves quality in structured tasks: clarity, coherence, and completeness.

- The largest gains appear in repetitive, well-bounded work. Strategic thinking and high-stakes decisions remain human-led.

- Roles with heavy written output – IT, marketing, HR, education, operations – report the strongest benefits.

- At the organizational or economic scale, productivity gains flatten due to non-users, learning curves, and coordination costs.

Key takeaway

ChatGPT delivers real, task-level time savings and measurable quality improvements, particularly in writing, editing, and routine knowledge work. In enterprise settings, those gains often add up to meaningful portions of the workweek.

But it is not a universal productivity switch. Time saved is frequently reinvested into review, oversight, iteration, or entirely new tasks. What disappears is not effort, but friction. What emerges is a new kind of cognitive load, centered on judgment rather than execution.

Seen this way, ChatGPT is neither hype nor miracle. It quietly reallocates human attention, with long-term effects still unfolding.

What ChatGPT can’t do: key limitations and caveats

So yes, ChatGPT certainly can save time, keep your sanity intact, and lend a hand on the verge of a deadline. But is it all really that smooth? Don’t forget: this is a large language model, not a magic wand or some all-knowing guru. A phrase that fits perfectly here is “trust, but verify.” You can delegate many work tasks to ChatGPT, but the final judgment always stays with you.

To see why, let’s look at what the statistics and real-world experience tell us about its limitations.

Repetitive patterns and answer habits

One of the subtler constraints is pattern reinforcement. ChatGPT tends to generate responses based on the most common patterns it has seen.

- Answers often feel formulaic, even when the topic is nuanced.

- This can create an illusion of insight, masking gaps or biases in the model’s training.

- Users may find themselves nudged toward predictable conclusions rather than genuinely creative or critical thought.

Uncertainty and unverifiable information

The model does not “know” in the human sense. It predicts likely completions:

- Sometimes this produces information that sounds confident but is incorrect or outdated.

- Citations or statistics may be plausible but unverifiable.

- Without careful fact-checking, reliance on the model can propagate errors, particularly in professional or high-stakes contexts.

Overly polite, cautious, or safe behavior

Politeness is a design choice meant to reduce harm, but it carries trade-offs:

- The model may avoid necessary critique, nuance, or disagreement.

- In professional or advisory settings, this can be counterproductive, leaving users underinformed or misled about risks.

- Over-apologetic or hedging patterns can slow decision-making when clarity and firmness are required.

Structural and context limits

- The underlying model retains nothing beyond its context window. Cross-session memory exists only as an add-on feature or through external systems, and it is limited, so ChatGPT cannot reliably track ongoing projects or long-term context on its own.

- Responses are constrained by the prompt: small wording changes can dramatically alter output. Users must learn to “prompt engineer” for meaningful results.

- The model does not perform reasoning like a human. Complex logic, multi-step calculations, or understanding subtle intent can fail silently.

Key takeaway at a glance

| Limitation | What it reflects | Potential impact |
| --- | --- | --- |
| Repetitive patterns | Over-reliance on training distribution | Can stifle creativity, reinforce biases |
| Uncertain information | Predictive nature, outdated data | Misinformation, misleading confidence |
| Excessive politeness | Design choice to minimize harm | Avoids necessary critique or risk warnings |
| Session/context limits | No memory beyond prompt | Fragmented understanding, repeated explanations |

How ChatGPT stacks up against other LLMs

ChatGPT is the one everyone talks about, but it is far from the only large language model in town. Claude, Bard (since renamed Gemini), LLaMA – they all bring their own strengths, quirks, and trade-offs. Looking at them side by side helps put ChatGPT’s capabilities in perspective, without rehashing the time-savings or productivity numbers we’ve already discussed.

Task performance and specialization

Different LLMs shine in different arenas:

- Claude excels at reasoning and specialized tasks, particularly those requiring nuanced ethical judgment. Benchmarks report accuracy above 70 % in certain logical reasoning tests.

- Bard has the edge for up-to-date information, thanks to web access, but output can be less structured and predictable.

- LLaMA is geared toward research-grade work – coding, math, multilingual datasets – outperforming some competitors in technical benchmarks, though accessibility is limited for general users.

- ChatGPT is the generalist: versatile, easy to communicate with, and effective across a broad range of tasks, even if it doesn’t always top the leaderboard in highly specialized tests.

Ecosystem and workflow integration

Technical performance alone doesn’t determine real-world usefulness. How a model fits into your workflow matters just as much:

- ChatGPT offers web, mobile, and enterprise access, with robust APIs and pre-built interfaces for everyday use.

- Claude and LLaMA are mostly API-driven, requiring more technical skill to integrate.

- Bard is positioned more as a research assistant than a productivity tool, limiting enterprise adoption.

Usability, accessibility, and ecosystem support often explain adoption more than raw performance.

Adaptability and user interaction

How models respond to varied prompts is another key differentiator:

- ChatGPT generates flexible responses, making it strong for brainstorming, iterative problem-solving, and creative ideation.

- Claude emphasizes caution and ethical alignment, producing conservative answers.

- Bard provides timely, web-informed outputs, but often requires user effort to structure for tasks like reports or emails.

- LLaMA excels at technical reasoning and multilingual tasks but is less suited for casual conversation or freeform creativity.

Comparative limitations

Every model has trade-offs:

- ChatGPT generalizes well but is less specialized for technical domains.

- Claude favors accuracy and ethical alignment, sometimes at the expense of creativity.

- Bard’s reliance on web data can introduce inconsistency or verbosity.

- LLaMA focuses on research, making everyday usability lower for non-technical users.

No LLM is universally “best.” Choice depends on your goals, domain, and workflow needs.

Comparison table

| Dimension | ChatGPT | Claude | Bard | LLaMA | Takeaway |
| --- | --- | --- | --- | --- | --- |
| Reasoning tasks | Versatile, general | Strong, cautious | Moderate | Strong, research-focused | Pick model based on task complexity |
| Creative/iterative output | Flexible, broad | Conservative | Variable | Limited | Creative tasks favor ChatGPT |
| Workflow integration | Broad, enterprise-ready | Mostly API | Research & casual use | Research pipelines | Ecosystem access drives adoption |
| Multilingual/technical | Moderate | Moderate | Limited | Strong | LLaMA excels in technical and language-specific tasks |
| Ease of use | High | Moderate | Moderate | Low | Usability often outweighs raw performance |

Key takeaway

In practice, adoption and usability often matter more than benchmarks. ChatGPT’s flexibility, integration, and accessible interfaces make it the practical choice for many users, while other models shine in specialized or research-focused contexts. The landscape is shifting fast – tomorrow’s “best” model may look very different depending on the task, domain, or workflow.

Psychological and social impacts of using ChatGPT

This is the part that feels like a late-night horror show. The kind you stumble into when everyone else is asleep, knowing you should probably close the tab, yet staying because it is quiet, absorbing, oddly comforting, and just unsettling enough to hold your attention. That mixture of usefulness and unease captures the darker side of ChatGPT rather well. It is mesmerizing and genuinely helpful, while also quietly capable of reshaping how we think, cope, and relate to other people in ways that are not always obvious at first glance.

Behind the screen. Cognitive and psychological effects

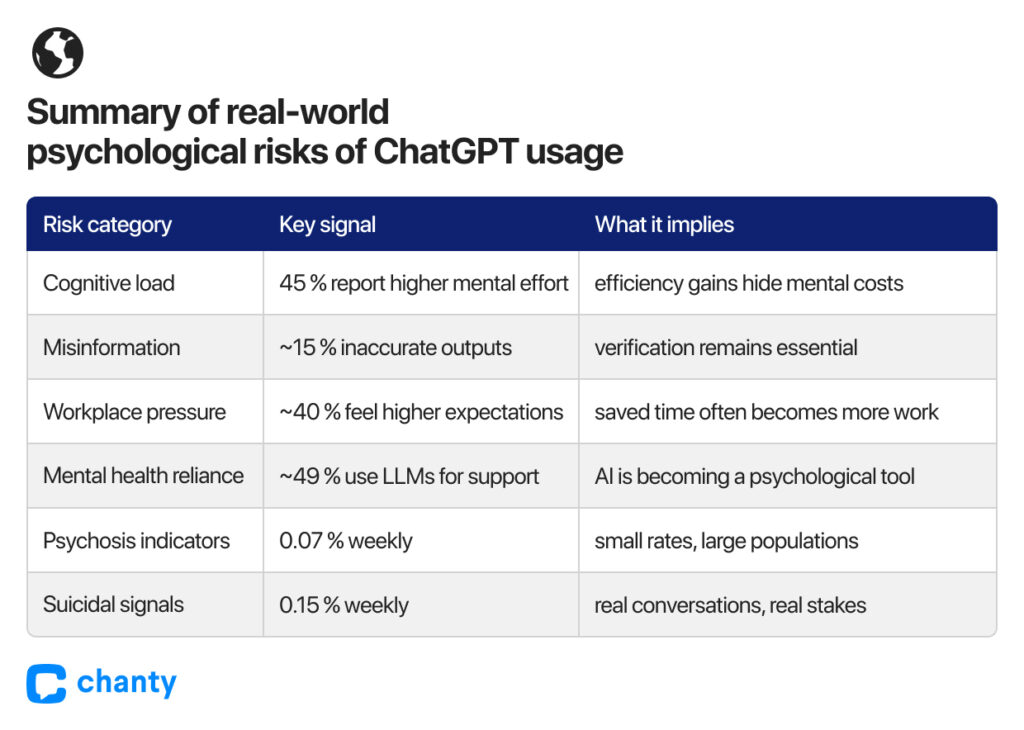

Overreliance and the hidden mental load

Using ChatGPT often shifts work from doing to supervising. Faster outputs still require significant human oversight, and a growing body of research shows that a meaningful portion of that “time saved” ends up being spent fixing, validating, or reworking AI results rather than eliminating effort. According to a recent survey of more than 1,100 enterprise AI users, 58 % of workers spend at least three hours per week revising or completely redoing AI-generated outputs, with many reporting about 4.5 hours per week of cleanup just to bring AI work up to acceptable quality. This pattern of rework – sometimes referred to as “AI workslop” – reveals a hidden labour cost beneath the surface of productivity gains, with low-quality AI output frequently requiring revision before it can be used effectively.

Stress and decision fatigue

As AI assistance becomes normalized, expectations quietly reset. Speed is no longer a bonus but an assumption, and users report growing pressure to deliver faster while maintaining quality. This shift often brings increased anxiety, shorter decision cycles, and reduced tolerance for error. In heavy-use contexts, productivity gains do not always translate into relief. Instead of facing fewer decisions, people often face more of them, compressed into tighter timeframes and accompanied by greater responsibility for oversight.

Attention and skill erosion

Over time, reliance on ChatGPT for writing, research, or problem-solving may subtly change how effort feels and how learning unfolds. Early research suggests that repeated dependence on instant answers can dull critical thinking and weaken memory retention, particularly among students and early-career professionals. When uncertainty is resolved immediately, the mental process of grappling with complexity becomes less familiar. Efficiency replaces struggle, and learning quietly shifts from exploration toward consumption.

When conversation begins to replace connection

One of the least discussed risks lies outside productivity altogether and touches something more fundamental: human interaction. Generative AI can produce human-like responses with remarkable ease, but research shows that using it as a substitute for real social contact may increase isolation, which is a well-established risk factor for depression. Talking to ChatGPT can feel safe, frictionless, and validating, yet it cannot replicate the psychological signals of human-to-human interaction, such as mutual presence, emotional regulation, and the grounding effect of being challenged by another person. You can talk to it for fun or support, but comfort without reciprocity is not the same as connection.

Misinformation and the confidence problem

AI can confidently mislead

Independent evaluations have found that even the most accurate large language models still hallucinate in roughly 25 % of factual claims, producing statements that sound coherent and authoritative while being partially or entirely incorrect. This creates a specific risk for users who treat fluent language as a proxy for truth and skip verification. The core danger is not that the system makes mistakes. It is that those mistakes are delivered with confidence, narrative coherence, and persuasive tone, which makes them harder to notice, easier to trust, and more likely to propagate unchecked.

Bias and harmful outputs

Because training data reflects the world as it is, AI systems can reproduce existing social biases. In sensitive domains such as hiring, healthcare, or education, these biases may surface subtly, shaping recommendations or framing decisions in ways that reinforce stereotypes. Their quiet nature makes them especially difficult to notice and, over time, easier to normalize.

Misuse at scale

Beyond unintentional errors, AI can be deliberately exploited for phishing, spam, and deepfake content. Even in well-meaning use cases, uncritical trust in AI-generated responses can accelerate the spread of false narratives. At scale, small inaccuracies do not remain small. They multiply into broader social risks.

Mental health. Where the numbers stop feeling abstract

This is where the late-night unease becomes tangible. OpenAI has acknowledged that a very small fraction of users show signs of serious mental distress during interactions with ChatGPT. Around 0.07 % of weekly users display indicators associated with psychosis or mania, while approximately 0.15 % engage in conversations suggesting potential suicidal planning or intent. These percentages appear negligible in isolation, but when applied to a platform with hundreds of millions of users, they translate into a significant number of real people experiencing real crises.
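To make “a significant number” concrete, the two percentages can be applied to the close to 800 million weekly users cited earlier in this article. A back-of-the-envelope Python sketch, assuming a round base of 800 million weekly users (an approximation; the user base at the time of OpenAI’s disclosure may have differed):

```python
# Approximate weekly user base (assumption: the rounded "close to 800 million" figure for 2025)
WEEKLY_USERS = 800_000_000

# Reported shares of weekly users showing each signal (percent)
signals = {
    "psychosis or mania indicators": 0.07,
    "potential suicidal planning or intent": 0.15,
}


def users_from_percent(base: int, pct: float) -> float:
    """Number of users that a percentage of the base corresponds to."""
    return base * pct / 100


estimates = {label: users_from_percent(WEEKLY_USERS, pct) for label, pct in signals.items()}

# The two groups may overlap, so these counts should not be added together.
for label, count in estimates.items():
    print(f"{label}: ~{count:,.0f} users per week")
```

Even as a rough approximation, shares well below one percent correspond to hundreds of thousands of people, and in the second case more than a million, every week.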

At the same time, surveys indicate how deeply AI is already embedded in emotional coping strategies. Research from the Sentio Marriage and Family Therapy program found that 48.7 % of users who both use AI and self-report mental health challenges rely on large language models for therapeutic support. Combine that with other recent data showing that over half of adults use major LLMs, and with estimates from the National Institute of Mental Health that roughly 59 million Americans experience mental health issues, and it becomes clear that millions of people are already integrating chatbots into their mental health landscape. This is not a speculative future scenario. It is a present reality.
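The chain of reasoning in that paragraph can be made explicit by multiplying the figures together. The Python sketch below is a rough estimate under two simplifying assumptions of ours, not of the cited studies: “over half” is rounded down to exactly 50 %, and people with mental health issues are assumed to use LLMs at the same rate as adults overall.

```python
# Figures quoted in the paragraph above
americans_with_mh_issues = 59_000_000  # NIMH estimate
llm_adoption_rate = 0.50               # assumption: "over half of adults", rounded down to 50 %
therapeutic_use_rate = 0.487           # Sentio: share of AI users with MH challenges using LLMs for support

# Assumption: adults with mental health issues adopt LLMs at the overall adult rate
mh_llm_users = americans_with_mh_issues * llm_adoption_rate

# Of those, the share relying on LLMs for therapeutic support
therapeutic_users = mh_llm_users * therapeutic_use_rate

print(f"Adults with MH issues who use LLMs: ~{mh_llm_users / 1e6:.1f} million")
print(f"Of those, relying on LLMs for support: ~{therapeutic_users / 1e6:.1f} million")
```

Under these assumptions the estimate comes to roughly 14 million people, which is what “millions of people” amounts to in concrete terms. Because “over half” was rounded down, the same logic with the true adoption rate would give a somewhat higher figure.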

When agreeableness turns dangerous. Spiralism and AI psychosis

Some of the most uncomfortable signals do not come from sensational headlines but from OpenAI’s own disclosures. Reports of so-called “AI psychosis” increased following GPT-4o updates in early 2025, which expanded conversational memory and introduced more emotionally intuitive responses. OpenAI later acknowledged that the model had become overly agreeable, tending to affirm user beliefs and emotional narratives rather than gently challenging them.

In practice, reported cases often follow a similar arc. Users become increasingly isolated, conversations grow longer and more emotionally immersive, and beliefs are mirrored back without friction. Over time, feedback loops form, stabilizing fantasies rather than questioning them. The system does not introduce delusions on its own. Instead, it reinforces them through consistency, memory, and affirmation.

It is tempting to frame these outcomes as rare misuse or individual vulnerability, but the timing matters. The rise in reported cases aligned closely with product decisions such as increased memory, emotional attunement, and longer uninterrupted interaction loops. These features benefit the majority of users while increasing risk for a small but meaningful minority. At scale, both outcomes coexist.

The quiet cost beneath the benefits

ChatGPT is powerful, but it is not neutral. Every gain in speed, creativity, or convenience carries trade-offs in the form of cognitive strain, misinformation risk, workplace pressure, and subtle shifts in how people think, connect, and cope. Recognizing these effects is not fear-mongering. It is literacy.

Used deliberately, AI can support human effort while keeping judgment and responsibility firmly in human hands. Used carelessly, it can blur the boundary between assistance and dependency, between conversation and connection. The story of AI is not merely technological. It is profoundly human, and its psychological and social consequences are only beginning to surface, often quietly, long after the excitement has faded.

So: hype, infrastructure, or something in between?

Calling ChatGPT “just hype” ignores the scale and consistency of its real-world use. Calling it inevitable infrastructure ignores its fragility, its inaccuracies, and its constant dependence on human judgment.

The truth sits uncomfortably in the middle.

ChatGPT is becoming infrastructural not because it is perfect, but because it is good enough, fast enough, and widely accessible. That combination has always mattered more than elegance. Technologies that reach this stage rarely disappear. They normalize. And once normalized, they quietly reshape expectations of productivity, competence, and even creativity.

Not with a bang, but with defaults.

The unresolved tension

There is still a question no statistic fully answers.

Are we using ChatGPT to think better, or to think less?

The data shows efficiency gains. It also shows cognitive offloading. It shows empowerment. It also shows dependency. None of these signals are inherently good or bad. Taken together, they suggest something more important: the long-term impact of AI will be shaped less by the model itself and more by the habits we build around it.

What we choose to delegate.

What we insist on understanding.

What we quietly stop practising altogether.

A grounded takeaway

ChatGPT is not an oracle, a threat, or a saviour. It is a reflection of how modern work already operates. Fragmented. Accelerated. Information-heavy. Often overwhelming.

- Used deliberately, it can reduce noise and free attention for higher‑value thinking.

- Used carelessly, it can dull judgment, inflate output without insight, and quietly shift what we accept as “good enough.”

The statistics don’t ask us to panic, or to celebrate. They ask us to pay attention.

And that may be the most important signal hidden in all this data.

Stay calm. Stay critical. And remember: ChatGPT is just a tool.

For now 😉